It’s a little bit more elaborate and complex, but that’s the gist of it.

The base models underlying ChatGPT and similar systems work in much the same way as a Markov model. But one big difference is that ChatGPT is far larger and more complex, with billions of parameters. And it has been trained on an enormous amount of data — in this case, much of the publicly available text on the internet.

In this huge corpus of text, words and sentences appear in sequences with certain dependencies. This recurrence helps the model understand how to cut text into statistical chunks that have some predictability. It learns the patterns of these blocks of text and uses this knowledge to propose what might come next.

An early example of generative AI is a much simpler model known as a Markov chain. The technique is named for Andrey Markov, a Russian mathematician who in 1906 introduced this statistical method to model the behavior of random processes. In machine learning, Markov models have long been used for next-word prediction tasks, like the autocomplete function in an email program.

In text prediction, a Markov model generates the next word in a sentence by looking at the previous word or a few previous words. But because these simple models can only look back that far, they aren’t good at generating plausible text.
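To make the "look at the previous word" idea concrete, here is a minimal sketch of a word-level Markov chain in Python. It is a toy, not how any real product is built: the tiny corpus and the names `build_bigram_model` and `generate` are made up for illustration, and it only ever looks back one word.

```python
import random
from collections import defaultdict

def build_bigram_model(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length=8, seed=0):
    """Generate up to `length` words, choosing each next word at random
    from the words seen after the current one."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    word = start
    output = [word]
    for _ in range(length - 1):
        options = followers.get(word)
        if not options:  # the word was never followed by anything
            break
        word = rng.choice(options)
        output.append(word)
    return " ".join(output)

# Hypothetical toy corpus, only for illustration.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = build_bigram_model(corpus)
print(generate(model, "the"))
```

Because each step only knows the single previous word, the output drifts quickly ("the dog sat on the mat and the cat ..."), which is the weakness described above.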

The newer generative text AIs basically scan many pieces of text and build word-association maps. Based on those maps, they guess which word comes next in the sentence they are writing.

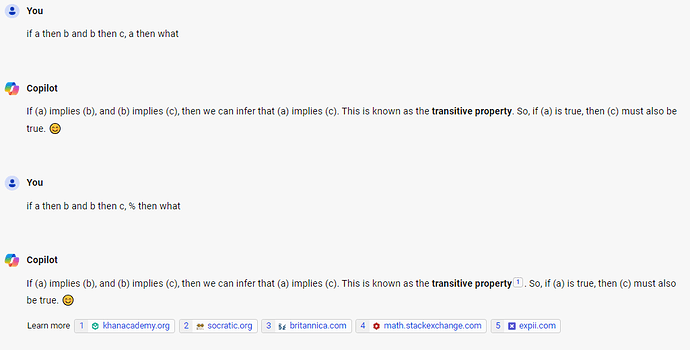

Think of it like this.

You write a sentence. You begin with a single word given to you (the “prompt word” or command). You Google that word, and in the first 100 hits you look at which word follows the “prompt word” and pick the one that occurs most often. Then you Google the two words you now have and see which word most often follows those two words combined. Rinse and repeat.
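The procedure just described can be sketched in a few lines of Python. This is only an illustration of the analogy, under some loud assumptions: the list `hits` stands in for the search results (nothing is actually fetched from Google), and the function names `next_word` and `write_sentence` are invented here.

```python
from collections import Counter

def next_word(documents, context):
    """Return the word that most often follows `context` (a list of words)
    across all documents, or None if the sequence never appears."""
    n = len(context)
    counts = Counter()
    for doc in documents:
        words = doc.lower().split()
        for i in range(len(words) - n):
            if words[i:i + n] == context:
                counts[words[i + n]] += 1
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def write_sentence(documents, prompt_word, max_words=6):
    """Start from the prompt word and keep appending the most common follower."""
    sentence = [prompt_word.lower()]
    while len(sentence) < max_words:
        word = next_word(documents, sentence)
        if word is None:  # nothing in the documents continues this sequence
            break
        sentence.append(word)
    return " ".join(sentence)

# Made-up stand-ins for the "first 100 hits".
hits = [
    "coffee is good in the morning",
    "coffee is good in the morning and bad at night",
    "coffee is good for you",
    "coffee is bad late at night",
    "coffee beans are roasted",
]
print(write_sentence(hits, "coffee"))
```

Note that the result can only ever be stitched together from sequences that already exist in `hits`, and that the longer the sentence gets, the fewer documents match it exactly.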

There are a few other things going on, but the above example is the main principle.

This reliably “mirrors” human writing, since it is parroting and combining human-written texts.

But it fundamentally has little understanding of the real-life meaning of the words it’s using in the generated text.

The generative AI will only suggest that you can “stand on a stool to reach a high shelf” if that example appears somewhere in the text it scanned.

A human, by contrast, could logically think, “If I can sit on that chair, it is strong enough to handle my weight, so I could probably also stand on it,” and come up with the idea of standing on a chair to reach a high shelf on their own.

An AI could not, since it does not “understand” what a chair is, only how the word is often used in the scanned text (for example, which words are used in the same sentence as “chair”).
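As a rough picture of what "knowing which words show up in the same sentence" means, here is a small Python sketch that counts co-occurring words. It is a deliberately crude stand-in, with made-up sentences and a made-up function name `cooccurring_words`; it is not a description of what any real system stores internally.

```python
from collections import Counter

def cooccurring_words(sentences, target):
    """Count the words that appear in the same sentence as `target`."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        if target in words:
            counts.update(w for w in words if w != target)
    return counts

# Hypothetical "scanned text", only for illustration.
scanned = [
    "she sat on the chair",
    "he stood on the chair to reach the shelf",
    "the shelf is high",
]
counts = cooccurring_words(scanned, "chair")
print(counts.most_common(3))
```

All the counter holds is which words tend to sit next to "chair". Nothing in it says a chair is a solid object that can carry your weight.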

source: Explained: Generative AI | MIT News | Massachusetts Institute of Technology